The Future of AI in Video Editing: Trends and Predictions

With the explosion of video content, everyone is searching for the next big breakthrough. It’s no surprise that 88% of video marketers now consider video a key element of their strategy.

In 2024, we’re seeing automated video editing take over tedious tasks, and many AI video editing tools are providing new ways to enhance creativity.

But the real excitement is about what’s coming.

As we head into 2025, AI in video editing looks poised for rapid growth.

Trends such as AI video automation, enhanced content personalization, and AI-driven VFX promise to streamline editing and change how we create content and apply complex effects.

In this post, we’ll break down these emerging trends and what they could mean for your video projects.

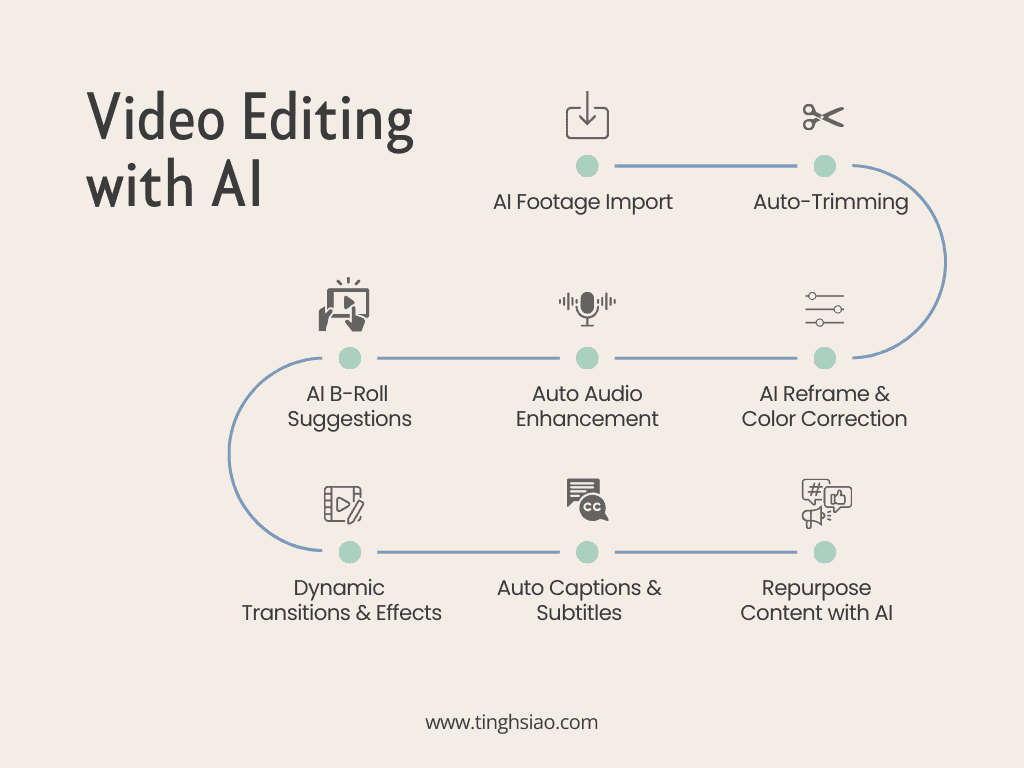

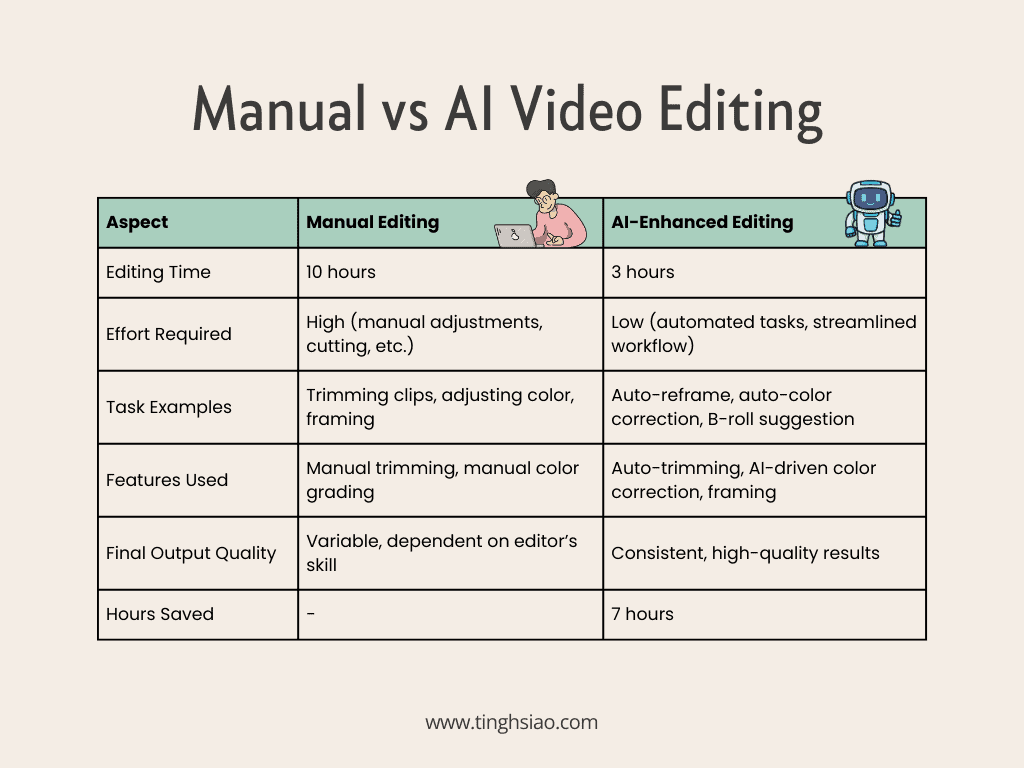

AI-Driven Automation in Editing

AI is revolutionizing video editing by automating tedious tasks such as adjusting framing, trimming silences, removing background noise, and correcting color. For example, Adobe Premiere Pro’s Auto Reframe tool automatically adjusts the framing of video clips to fit various aspect ratios, saving significant time on manual adjustments.

I remember when I first used Gling AI to remove awkward pauses from my footage. What used to take hours of meticulous editing is now handled in minutes, showcasing AI’s efficiency in streamlining these processes.
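If you’re curious what silence trimming might look like under the hood, here is a minimal Python sketch. It is not how Gling AI or any other product actually works; it simply assumes the audio has already been reduced to a list of loudness values, one per fixed-length window, and flags stretches that stay below a threshold. The function name `find_silences` and its parameters are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple


def find_silences(
    levels: List[float],
    window_s: float,
    threshold: float = 0.02,
    min_silence_s: float = 0.5,
) -> List[Tuple[float, float]]:
    """Return (start, end) times in seconds where loudness stays below threshold.

    Assumptions (not from any real tool): `levels` holds one loudness value
    per window of `window_s` seconds, scaled to the range 0.0 to 1.0.
    """
    silences = []
    run_start = None  # index of the first quiet window in the current run

    for i, level in enumerate(levels):
        if level < threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            # A loud window ends the quiet run; keep it only if long enough.
            if (i - run_start) * window_s >= min_silence_s:
                silences.append((run_start * window_s, i * window_s))
            run_start = None

    # Handle a quiet run that lasts until the end of the clip.
    if run_start is not None and (len(levels) - run_start) * window_s >= min_silence_s:
        silences.append((run_start * window_s, len(levels) * window_s))

    return silences


levels = [0.3, 0.01, 0.01, 0.01, 0.4, 0.01, 0.5]
pauses = find_silences(levels, window_s=0.25)
```

An editor could then cut or shorten each returned range. Real tools likely combine this kind of loudness check with speech recognition so they don’t clip quiet but meaningful moments.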

As AI continues to advance, we can expect even greater transformations in video editing:

- Automated B-Roll Suggestion: Some AI tools are already analyzing main footage to suggest relevant B-roll clips from a library or stock footage collection. This feature not only speeds up the process of finding and inserting supporting visuals but also enhances the narrative flow of the video.

- Smart Editing Assistants: Future AI tools might act as smart editing assistants, understanding complex instructions and making decisions based on style or tone. These tools will handle repetitive tasks, allowing editors to focus on more strategic elements of their projects.

This means we’ll see even more intuitive and intelligent tools that will further enhance our workflows and creative capabilities.

Enhanced Content Personalization

AI is taking personalization to a whole new level. I was blown away when I first saw Vidyard in action. Imagine being able to send personalized video messages to your customers, addressing them by name and referencing their specific interests.

And have you seen what Facebook’s AI can do with your uploaded videos? It creates custom highlight reels based on engagement metrics. I was genuinely impressed by how it picked out the best moments from my footage. It’s like their AI somehow knows exactly what you want to see.

Looking ahead, the potential for real-time content adaptation is particularly exciting. Imagine AI adjusting video content dynamically based on viewers’ reactions. For example, a video could switch scenes if it detects boredom, a concept that might sound like science fiction but is becoming increasingly feasible.

AI-driven personalization could take forms such as:

- Dynamic Scene Selection: AI algorithms could choose from pre-recorded scenes based on viewer interest, ensuring that the most engaging content is presented.

- Personalized Narratives: Stories might branch out in different directions depending on viewer preferences, similar to interactive shows like “Black Mirror: Bandersnatch.”

These advancements promise to make video content not only more engaging but also more relevant to individual viewers, transforming how we create and consume media.

AI-Assisted Animation and VFX

As someone captivated by the magic of visual effects, I’m excited about how AI is making these tools accessible to everyone. For example, I’ve used Runway to remove backgrounds from my YouTube videos effortlessly. What used to take hours of post-production work was completed in minutes, with stunning results.

Another thrilling development is AI-driven facial animation automation. Tools like Deep Animator enable auto facial motion capture, transforming a simple headshot or character design into a fully animated face. While I’m not a professional animator, I’ve always been intrigued by the subtle nuances of facial expressions in animation. Whether it’s for a talking head segment or adding personality to a virtual avatar, achieving those subtle details traditionally required extensive skill and time.

Looking ahead, the future promises even more groundbreaking advancements in AI-driven VFX and animation:

- Advanced AI-Generated Environments: AI could revolutionize virtual world creation by generating entire landscapes with realistic terrain, vegetation, and weather patterns based on simple text prompts. This technology could also enable rapid prototyping of set designs, allowing for more creative experimentation during pre-production.

- Hyper-Realistic Character Creation: Future AI advancements might enable AI-driven performance capture, translating an actor’s performance into any digital character, regardless of physical differences. We might also see AI-generated actors delivering nuanced performances, opening up new storytelling possibilities.

- Real-Time VFX Rendering: Imagine real-time VFX during filming, allowing directors to see near-final effects and make immediate adjustments on set. Additionally, live VFX for streaming could become a reality, letting content creators apply complex visual effects to live streams and enhance viewer engagement.

Real-Time (Cloud-Based) Collaboration and Feedback

In our globalized world, real-time collaboration has become crucial for creative teams. AI is now making it easier than ever for editors and creators to work together, no matter where they are located.

Frame.io is a prime example of this shift. It allows team members to leave time-stamped feedback directly on the video, reducing back-and-forth emails and speeding up revisions. Its feature for comparing different edits side by side has proven invaluable for remote teams across different time zones.

Similarly, Reduct simplifies the editing of content-heavy videos. By using transcript-based editing, you can cut down hours of footage into concise segments, with the video updating automatically as you edit the transcript. This tool is a big time-saver for projects like interviews.
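To make the idea of transcript-based editing concrete, here is a small Python sketch. It is an illustration under my own assumptions, not Reduct’s implementation: it assumes each transcript word carries start and end timestamps, and that deleting a word in the text should drop the matching slice of video. The `Word` class and `segments_to_keep` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Word:
    text: str
    start: float  # seconds into the source video
    end: float


def segments_to_keep(words: List[Word], deleted: Set[int]) -> List[Tuple[float, float]]:
    """Merge the surviving words into continuous (start, end) video segments."""
    segments = []
    current = None  # segment being built, as (start, end)

    for i, word in enumerate(words):
        if i in deleted:
            # A deleted word closes the segment built so far.
            if current is not None:
                segments.append(current)
                current = None
            continue
        if current is None:
            current = (word.start, word.end)
        else:
            current = (current[0], word.end)  # extend to cover this word

    if current is not None:
        segments.append(current)
    return segments


transcript = [
    Word("So", 0.0, 0.3),
    Word("um", 0.3, 0.9),
    Word("welcome", 0.9, 1.5),
    Word("back", 1.5, 1.9),
]
# Deleting "um" (index 1) from the transcript splits the video into two segments.
kept = segments_to_keep(transcript, deleted={1})
```

Joining the kept segments end to end gives the edited video, which is why changing the text can update the cut automatically.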

Of course, relying on internet connections has its challenges. I’ve heard horror stories of crucial review sessions getting derailed by technical issues. So, it’s always good to have a backup plan. But the upsides far outweigh the downsides. AI is paving the way for even deeper integration into video workflows.

Imagine a future where AI not only assists in editing but predicts potential issues before they happen, offering data-driven solutions. We’re not there yet, but the foundation is being laid today.

AI-Generated Content

The rise of AI-generated video content is both exciting and a little unnerving.

I recently experimented with InVideo, where I saw how inputting a brief and choosing a template allows an AI assistant to guide the editing process. Videos that used to take days to produce are now being completed in hours. It’s efficient, but it does make me wonder about the future of creativity in our field.

I also had a chance to see Runway ML’s text-to-video generation capabilities in action. Watching it create drone footage with impressive quality and precision was eye-opening. As these tools continue to advance, they could revolutionize filmmaking in unprecedented ways—imagine AI generating an entire movie!

Looking ahead, AI might be able to help with the following aspects of video generation:

- Hyper-Realistic AI-Generated Actors: AI could lead to the emergence of hyper-realistic digital performers. Directors might create and fine-tune virtual actors tailored to specific roles, reducing casting costs and time. AI could also handle complex stunts with digital doubles, enhancing safety on set.

- AI-Powered Virtual Production: AI might transform filmmaking through virtual production. Intelligent set design could enable real-time creation and modification of virtual sets based on director input. Additionally, AI could dynamically adjust lighting and atmospheric effects to enhance mood and storytelling.

Now, I’m not saying AI is going to replace us all overnight. Far from it. I remember watching a video comparison between an AI-generated ad and one created by a human team. While the AI ad was technically sound, it lacked the emotional depth that only human experience can provide. It’s a reminder of why storytelling matters—connecting on a human level is something AI can’t quite replicate.

Improved Accessibility Features

One of the most exciting developments in AI-powered video editing is the potential for enhanced accessibility features. When I first started my AI channel, I didn’t think much about making content accessible. I didn’t include captions or anything like that until I realized how AI could simplify this process.

Tools like CapCut now offer automatic subtitles, which make it easier for creators to include captions and reach a broader audience. This is invaluable for viewers with disabilities and those who watch videos silently while commuting or scrolling in bed. When I started providing quality captions, my views and watch time significantly increased.
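Once a tool has produced timed caption text, the last step is usually writing it to a subtitle file. Here is a minimal Python sketch that formats captions in the widely used SubRip (.srt) layout. The speech recognition step is assumed to have happened already, and the helper names `srt_timestamp` and `to_srt` are my own, not part of CapCut or any library.

```python
from typing import List, Tuple


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(captions: List[Tuple[float, float, str]]) -> str:
    """Build SRT text from (start_seconds, end_seconds, text) tuples.

    Assumption: the captions are already in playback order, as they
    would be coming out of a transcription step.
    """
    blocks = []
    for number, (start, end, text) in enumerate(captions, start=1):
        blocks.append(
            f"{number}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # SRT entries are separated by a blank line.
    return "\n".join(blocks)


srt_text = to_srt([(0.0, 1.5, "Hello"), (1.5, 3.0, "World")])
```

Most video platforms and editors accept .srt uploads, so a file like this can be attached to a video without re-rendering it.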

But here’s where it gets really exciting: AI-powered translation. I recently used a tool called HeyGen that auto-translated my videos into Spanish and Mandarin with surprising accuracy. This means creators can now reach a global audience without the need for manual translations or extra resources.

In the future, AI might even enable viewers to select their preferred language and get real-time, accurate translations directly. For creators, this means our content can reach a more diverse audience. For viewers, it means access to a wider range of content that might have been previously inaccessible. It’s a win-win.

This brings us to the ethical side of AI-generated content. As we explore this topic, we’ll discuss how creators can navigate these advancements while staying true to their values and maintaining their audience’s trust.

Ethical Considerations and Authenticity

As AI-generated content becomes more common in video editing, ethical considerations around authenticity and representation are increasingly important. The rise of deepfakes and synthetic media has creators and consumers questioning what real content is and how AI impacts trust in media.

For example, in early 2021, deepfake videos featuring a fake Tom Cruise went viral on TikTok, showing him performing magic tricks and telling jokes. While these were intended for entertainment, they highlighted the risks of misuse.

Similarly, ahead of the January 2024 New Hampshire primary, thousands of AI-generated robocalls impersonating President Joe Biden were sent to Democratic voters, discouraging them from voting. This incident underscored the potential for deepfakes to manipulate electoral processes and public perception.

The issue of nonconsensual deepfake pornography is also alarming. A 2023 study reported that 98% of deepfake videos online were pornographic, with 99% of those targeting women. Celebrities like Taylor Swift have been among the targets. This trend shows the troubling ways deepfakes can exploit and harm individuals, particularly women.

In another instance, in the summer of 2023, New Jersey teenagers created AI-generated nude images of their classmates, leading to legal action and raising concerns about the misuse of AI among youth. This incident highlights the urgent need for education on the ethical use of AI technologies.

As AI continues to advance, discussions around representation in AI-generated content will be vital. If AI systems are trained on biased datasets, the resulting content may perpetuate stereotypes or exclude marginalized voices. Creators must actively seek diverse perspectives to ensure their use of AI aligns with values of inclusivity and authenticity.

Preparing for AI Trends in Video Editing

So, how do we prepare for these trends in AI video editing?

Here’s my advice:

Familiarize Yourself with AI Tools

Start by getting to know the AI features in software you already use. Popular video editing programs such as Adobe Premiere Pro and Final Cut Pro now offer AI-powered features, so take time to explore their capabilities and experiment with different techniques. Additionally, keep an eye out for new AI video editing tools.

Explore Dedicated AI Editing Tools

Now, don’t just stop there. Explore dedicated AI editing tools like Gling AI or Vmaker AI. I was skeptical about Gling AI’s ability to edit video by editing text, but it’s become my go-to for creating a quick rough draft.

Commit to Continuous Learning

Set aside an hour each week to stay updated on AI developments. For example, I follow industry blogs and newsletters that cover the latest trends and breakthroughs. Additionally, subscribing to YouTube channels with AI tutorials and expert insights can enhance your understanding. Participating in webinars is another great way to interact with professionals and ask real-time questions. Continuous learning is crucial to staying ahead of the curve in a rapidly evolving industry.

Experiment and Adapt

Experiment, experiment, experiment! I can’t stress this enough. Don’t hesitate to test out various AI tools to see how they fit into your workflow. For example, if you’re working on a dialogue-heavy project, try using auto transcription features to save time.

Experimenting with different tools will help you identify which ones best complement your editing style and enhance your creative process.

Remember Your Creativity

Remember, AI is a tool, not a replacement for your creativity. Use it to enhance your work, not define it.

Stay curious, keep learning, and don’t be afraid to push boundaries. The future of video editing is exciting, and with these preparations, you’ll be ready to ride the AI wave like a pro.